About

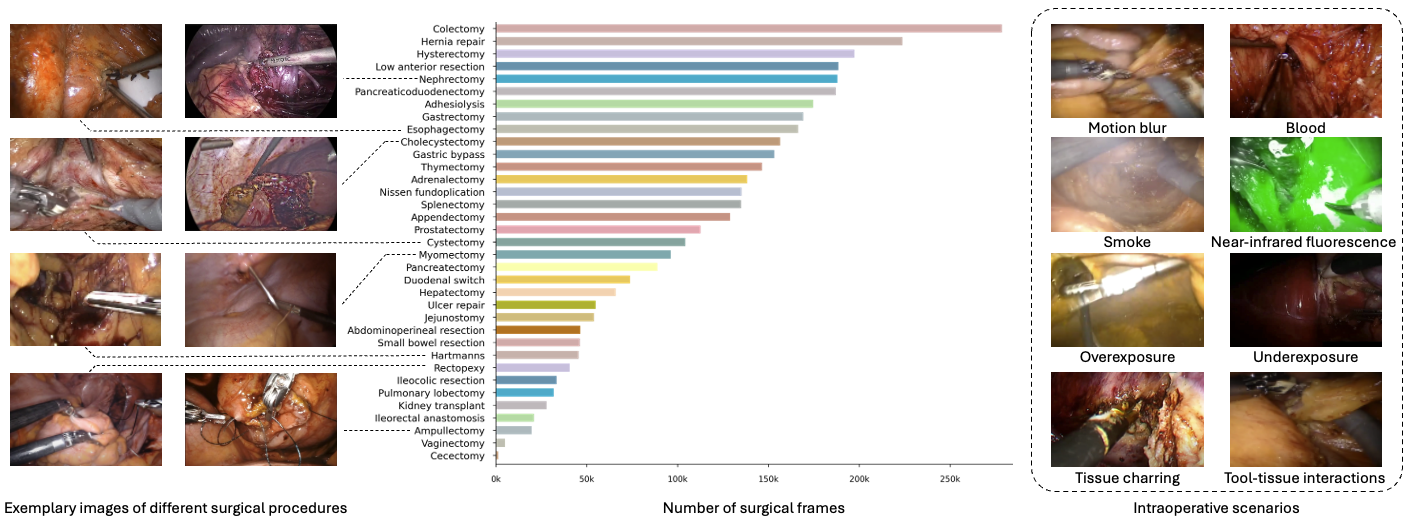

The LEMON dataset is a large-scale video database for computer vision applications in surgery. It comprises more than 4,000 high-resolution surgical videos totaling 938 hours (85 million frames) of high-quality footage from 35 different surgical procedures, including both robotic-assisted surgeries and traditional (non-robotic) laparoscopies. Each video is annotated with its procedure type (e.g., gastric bypass or ulcer repair) and its surgery type (i.e., robotic or non-robotic).

The diversity and procedure prevalence in LEMON are as follows:

Why LEMON?

To develop a robust surgical foundation model, it is essential to have a comprehensive, large-scale dataset of diverse surgical images that covers the whole spectrum of minimally invasive surgical procedures performed in the operating room. To fulfill this need, we propose LEMON, a dataset of more than 4,000 videos totaling 938 hours (85 million frames) from 35 different surgical procedures, including both robotic-assisted surgeries and traditional (non-robotic) laparoscopies.

Annotation

- Multi-label surgical procedure type: each video is annotated with one or more surgical procedure labels (surgeons sometimes perform two procedures in a single intervention), drawn from a total of 35 distinct procedures.

- Binary surgery type: each video is labeled as either robotic or non-robotic.
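To make the two annotation types concrete, here is a minimal Python sketch of one way a per-video record could be represented, with the multi-label procedure type encoded as a multi-hot vector. The class `VideoAnnotation`, its field names, and the `PROCEDURES` vocabulary are hypothetical: only "gastric bypass" and "ulcer repair" come from the description above, "cholecystectomy" is a placeholder, and the released annotation files may use a different schema.

```python
from dataclasses import dataclass
from typing import List, Sequence

# Hypothetical, truncated label vocabulary. The real dataset defines 35
# procedures; "cholecystectomy" is only a placeholder entry here.
PROCEDURES: List[str] = ["gastric bypass", "ulcer repair", "cholecystectomy"]


@dataclass
class VideoAnnotation:
    """Hypothetical per-video record mirroring the two LEMON annotation types."""
    video_id: str
    procedures: List[str]  # one or more procedure labels (multi-label)
    robotic: bool          # binary surgery type: True = robotic, False = non-robotic

    def procedure_vector(self, vocab: Sequence[str] = PROCEDURES) -> List[int]:
        """Multi-hot encoding: 1 for every procedure present in the video."""
        unknown = set(self.procedures) - set(vocab)
        if unknown:
            raise ValueError(f"unknown procedure labels: {sorted(unknown)}")
        return [1 if name in self.procedures else 0 for name in vocab]


# A non-robotic intervention in which two procedures were performed.
ann = VideoAnnotation("example_video", ["gastric bypass", "ulcer repair"], robotic=False)
print(ann.procedure_vector())  # [1, 1, 0]
```

A multi-hot vector, rather than a single class index, is what lets a video containing two procedures carry both labels at once.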

Research Team

- Chengan Che, King’s College London

- Chao Wang, King’s College London

- Prof. Tom Vercauteren, King’s College London

- Dr. Sophia Tsoka, King’s College London

- Dr. Luis Carlos Garcia Peraza Herrera, King’s College London

Dataset and Licensing

We adhere to the same practices as other datasets created from YouTube and other web sources, such as ImageNet, Kinetics, YouTube-VIS, YouTube-8M, Insect-1M, Moments-in-Time, Tai-Chi-HD, HD-Villa-100M, and AVSpeech. Specifically, we provide the code to download and generate the LEMON dataset, a list of links to the original YouTube videos, and the corresponding annotations. Researchers at academic institutions can request direct access to the full LEMON image dataset (sampled at 1 fps) in LMDB format for non-commercial purposes. The full video dataset will be released soon! We also provide a request form through which YouTube video authors can opt out of the LEMON dataset. The LEMON dataset is provided under the Creative Commons Attribution 4.0 International (CC BY 4.0) license.
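The image release is sampled at 1 fps. The Python sketch below shows one plausible way to choose which frames to keep when subsampling a video to 1 fps, and how the 938 hours of footage bound the size of such a release. The function `frames_at_1fps` and its nearest-frame rule are assumptions for illustration, not the sampling used by the official LEMON code.

```python
import math
from typing import List


def frames_at_1fps(num_frames: int, native_fps: float) -> List[int]:
    """Return the indices of frames kept when subsampling a video to 1 fps.

    Hypothetical rule: for each whole second t of the video, keep the frame
    whose timestamp (index / native_fps) is nearest to t.
    """
    if native_fps <= 0:
        raise ValueError("native_fps must be positive")
    duration_s = num_frames / native_fps
    kept = []
    for t in range(math.ceil(duration_s)):
        idx = round(t * native_fps)  # index of the frame nearest to second t
        if idx < num_frames:
            kept.append(idx)
    return kept


# A 4-second clip recorded at 25 fps (100 frames) yields one frame per second.
print(frames_at_1fps(100, 25))  # [0, 25, 50, 75]

# Upper bound on the 1 fps image count: one image per second of footage.
total_seconds = 938 * 3600
print(total_seconds)  # 3376800, i.e. at most about 3.4 million images
```

The count is an upper bound: it assumes every second of the 938 hours is kept, so any filtering applied when building the LMDB release would reduce it.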

Sponsors

We are grateful for the support of King’s College London, which enabled this project.